Runway Gen-2 Video AI: What Is It and How to Use It?

Written by Ivana Kotorchevikj

Generative AI is moving at such breakneck speed that new AI tools spring up every day, promising to simplify every aspect of our lives. Until recently, users were amazed by the text-to-image platform Midjourney and its ability to create mystical, stunning images.

However, there’s a new talk of the AI town: Runway’s launch of Gen-2, a multimodal model that generates videos from text prompts, images, and other videos. Runway is not the only pioneer in the field; a number of tech companies are developing or offering AI-powered text-to-video generation.

But what exactly is the Runway Gen-2 Video AI model? What sets it apart from the AI tools we've seen before? And how can it be used to transform different sectors? Let's delve into the world of this groundbreaking AI model to answer these questions and more.

What is Gen-2?

Imagine a world where custom-tailored video content can be created with a few lines of text and where unique, high-quality animations can be generated in moments for your advertising, business, and education needs.

Welcome to the era of the Gen-2 Video AI model, a revolutionary tool that is rewriting the rules of content creation. This advanced model transforms text, images, and video clips into high-quality videos, pushing the boundaries of what we thought was possible.

More importantly, Gen-2 is the first commercially available text-to-video AI model. There are many other similar models being developed by tech giants, but they remain in the research stage and are available only to engineers.

Runway features

Gen-2 relies on artificial intelligence and machine learning. It takes text, an image, or an existing video as input and generates a new video from it. The model analyzes the input's composition and style and applies them to the generated video.

The Gen-2 text-to-video generator is the latest and most popular feature on Runway. However, the platform offers many other AI-powered features for generating and editing images and videos:

- Gen-1 video-to-video generator - Changes the style of a video with a text or image prompt.

- Remove background - Removes, blurs, or replaces the video background.

- Text-to-image generator - Generates images with text prompts.

- Image-to-image generator - Edits and transforms images with text prompts.

- Train your own generator - Creates custom portraits, animals, styles, etc.

- Infinite image - Expands your image with text-to-image generation.

- Expand image - Expands the edges of your images.

- Frame interpolation - Turns a sequence of images into an animated video.

- Erase and Replace - Remixes any part of your image.

- Backdrop Remix - Gives any photo infinite backgrounds.

- Image variation - Generates variations of images.

- 3D texture - Generates 3D texture of an image with text prompts.

- Inpainting - Removes objects and people from videos.

- Color grade (LUT) - Color grades videos with text prompts.

- Super-slow motion - Turns a video into super-slow motion.

- Blur Faces - Detects and hides faces from videos.

- Depth of field - Adjusts the depth of field in videos.

- Extract Depth - Automatically generates a video depth map.

- Scene Detection - Automatically detects scene changes and splits footage into clips.

- Clean Audio - Removes unwanted background noise from video.

- Remove Silence - Removes silence from audio and video.

- Transcript - Transcribes a video to text.

- Subtitles - Generates subtitles for videos.

- Add Color - Colorizes black and white images.

- Upscale Image - Increases the resolution of images.

- Motion Tracking - Tracks moving objects in the video.

Runway also features an Academy with video tutorials that help users get to grips with the different tools.

User profile

To use Gen-2, you need to create an account on the Runway platform, where you can manage your credits, subscriptions, username, email, etc.

Conveniently, all your generated videos are stored in your ‘Assets’ tab. You can organize your videos in folders and share them with your associates.

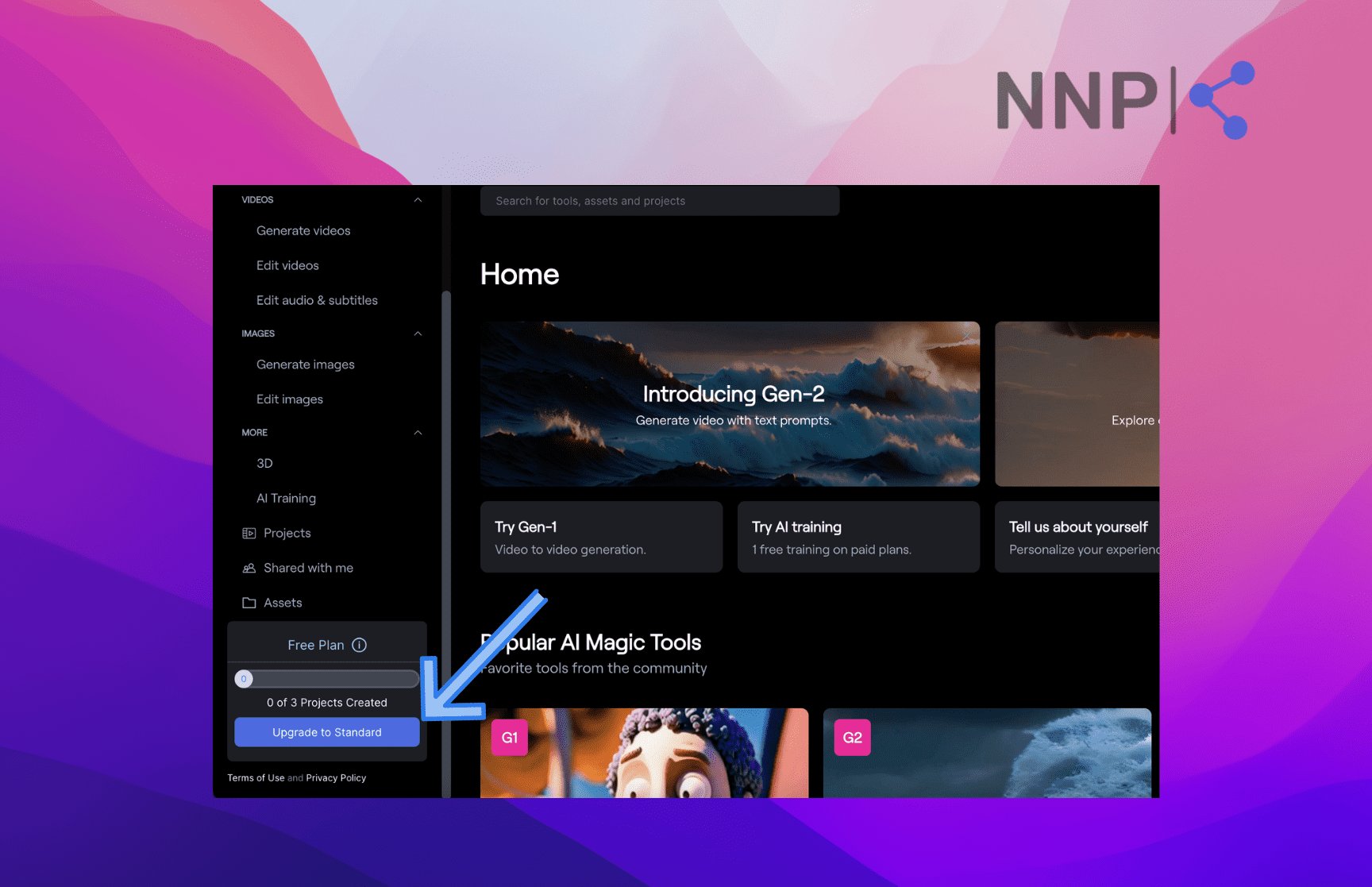

Additionally, you can create ‘Projects’ for a better workflow. (The free plan is limited to 3 projects; to create more, you need to upgrade your subscription.)

Helpful guide: How to use Gen-2

Here’s how to generate videos with Runway’s Gen-2.

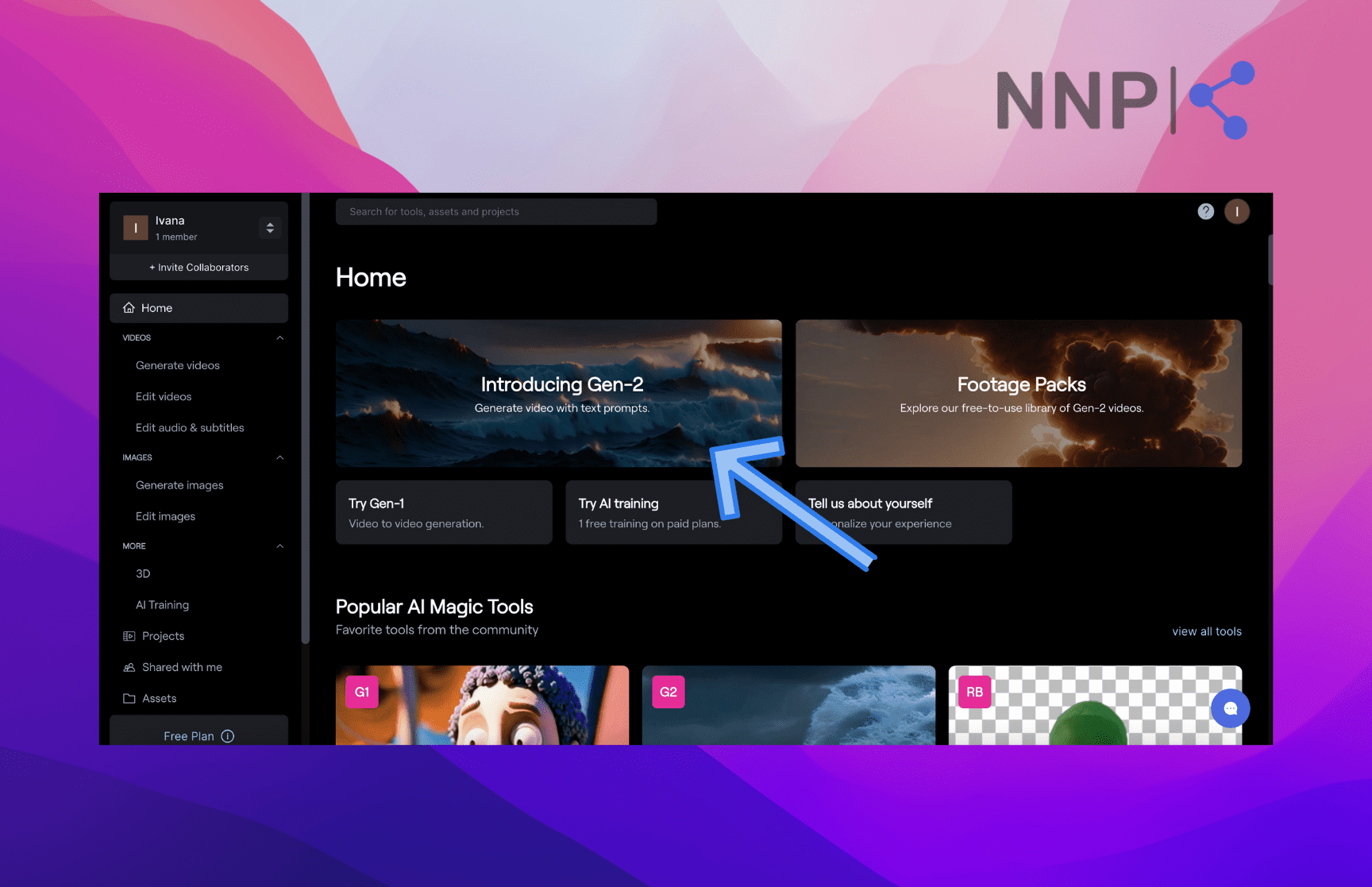

- Go to the Runway Gen-2 page and click on ‘Sign in to Runway.’ Alternatively, open the Runway dashboard.

- Sign up on the platform.

- Next, click on the ‘Introducing Gen-2’ banner.

- You’ll be redirected to the Gen-2 page.

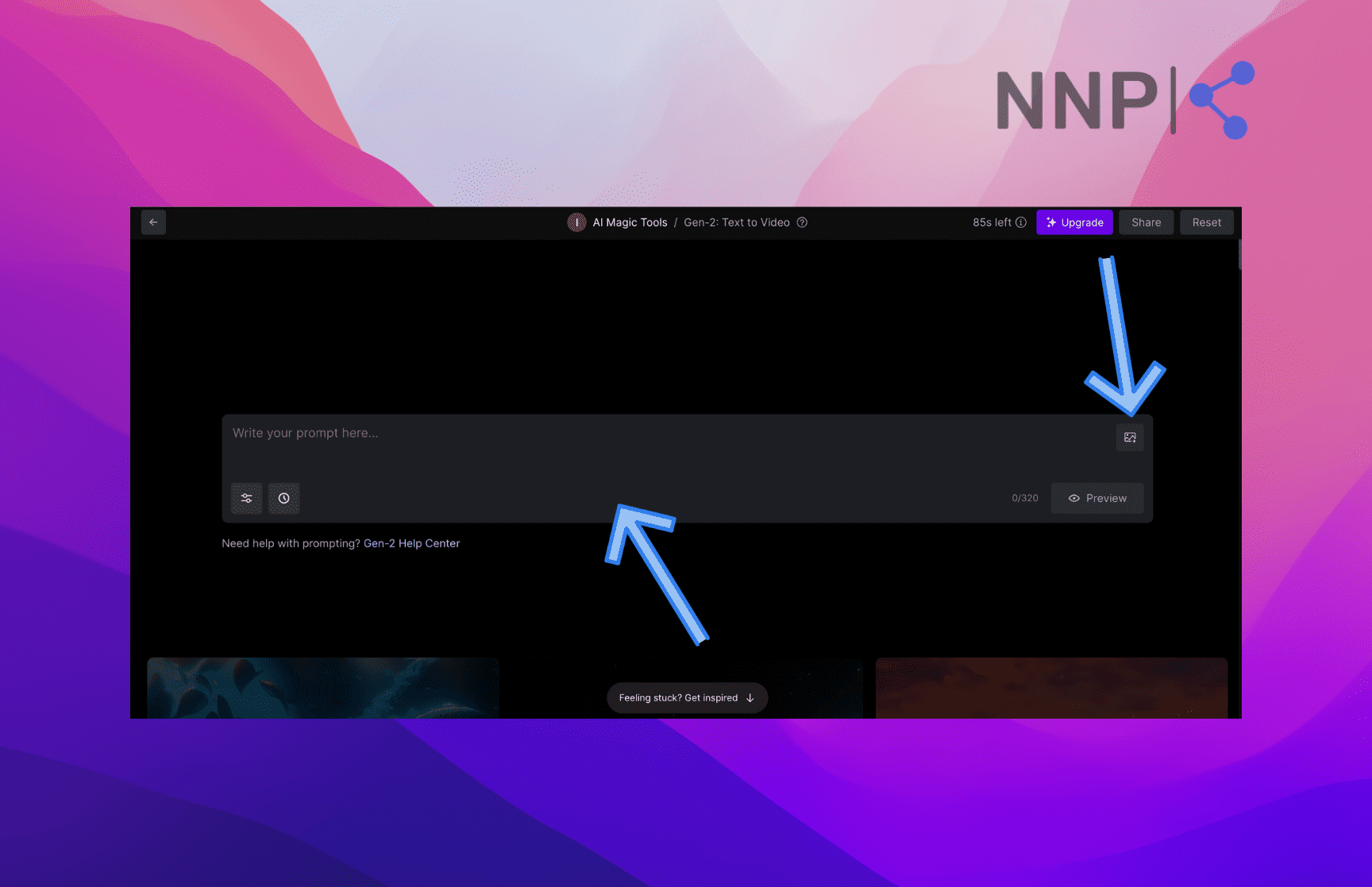

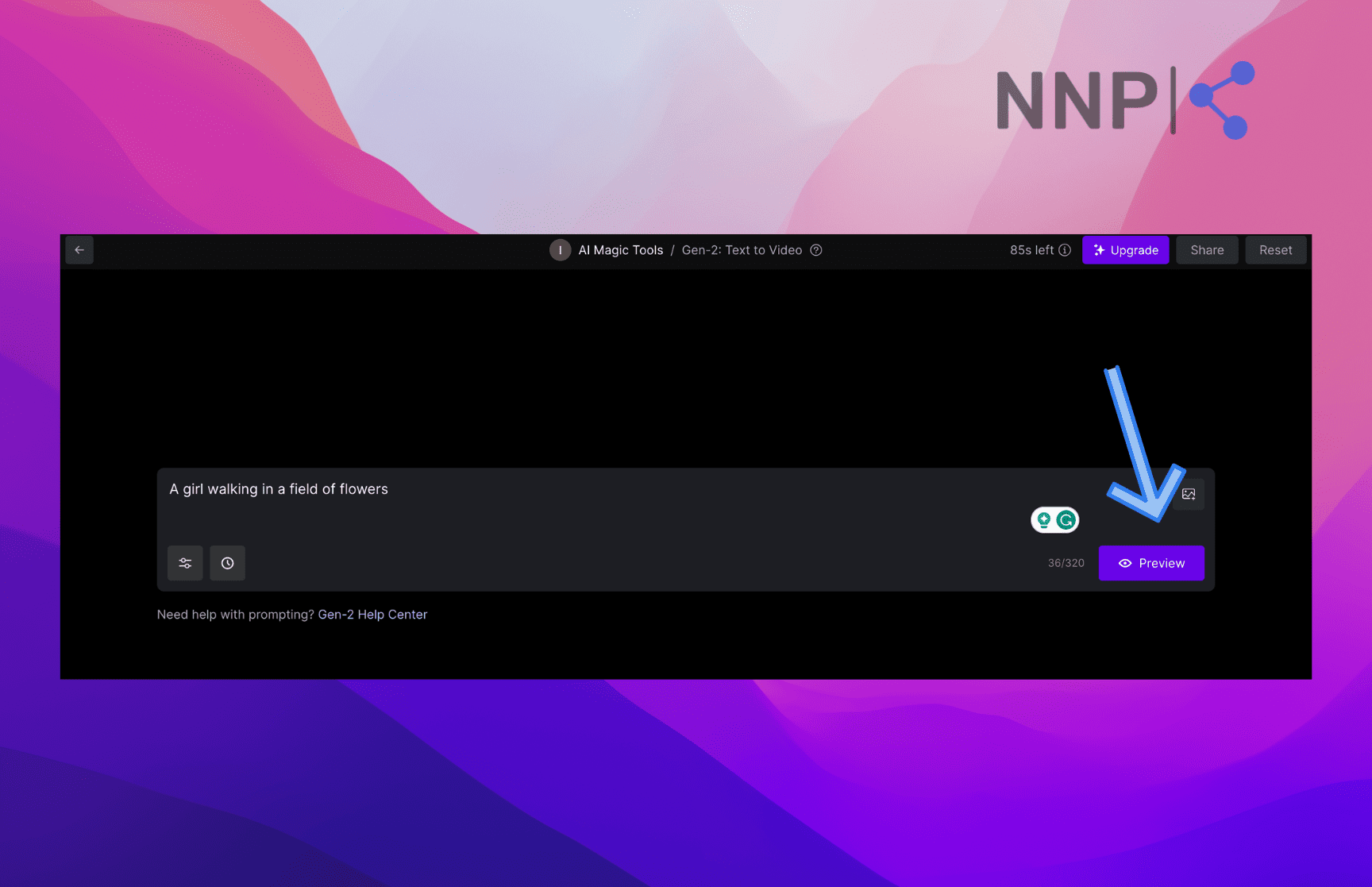

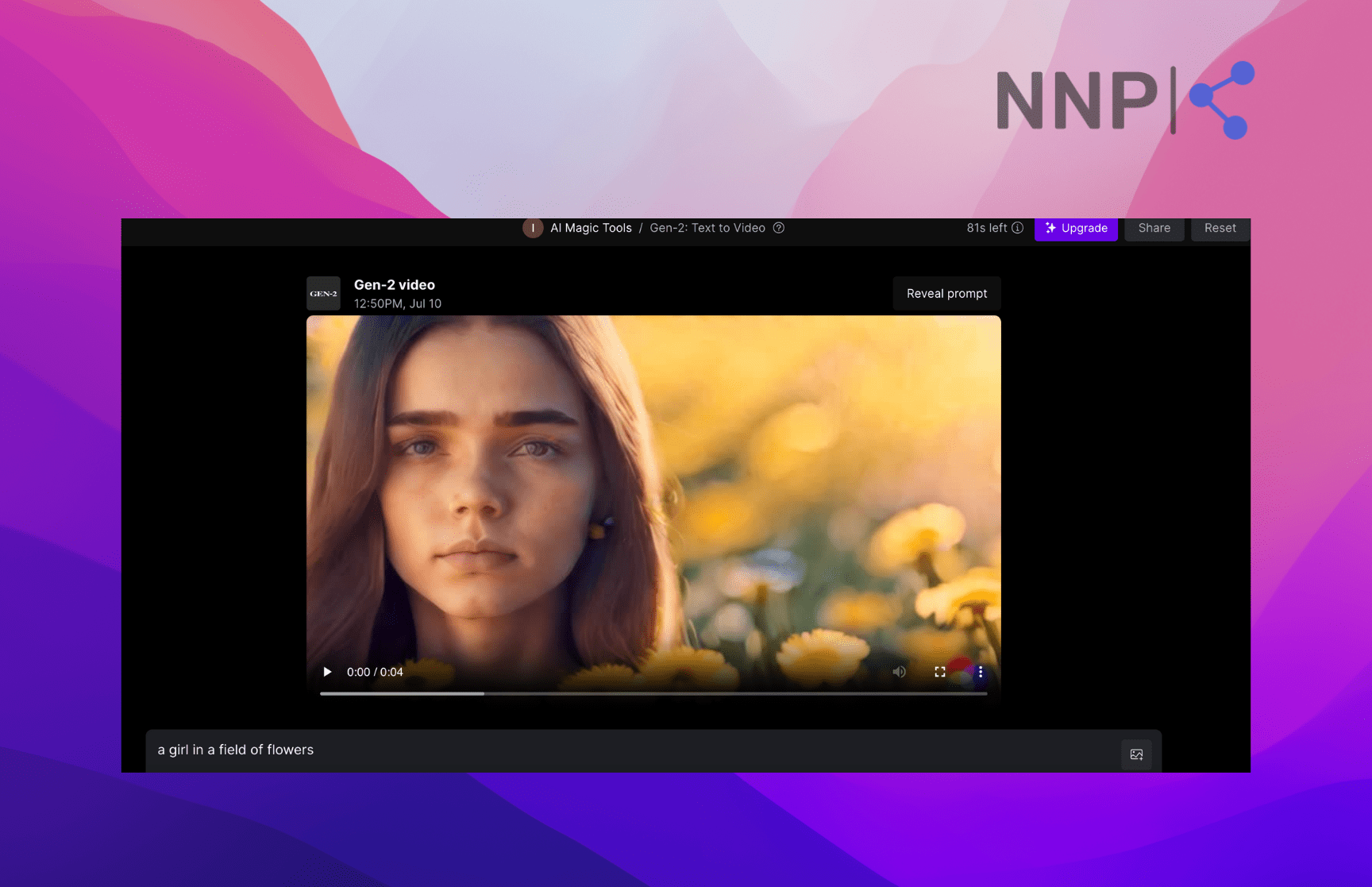

- Enter your prompt in the text field. (Note: Make sure to be as descriptive as possible)

- You can also upload an image to use as the basis for the video by clicking on the ‘image’ icon in the top-right corner of the text box.

- Then, click on the ‘Preview’ button in the bottom-right corner.

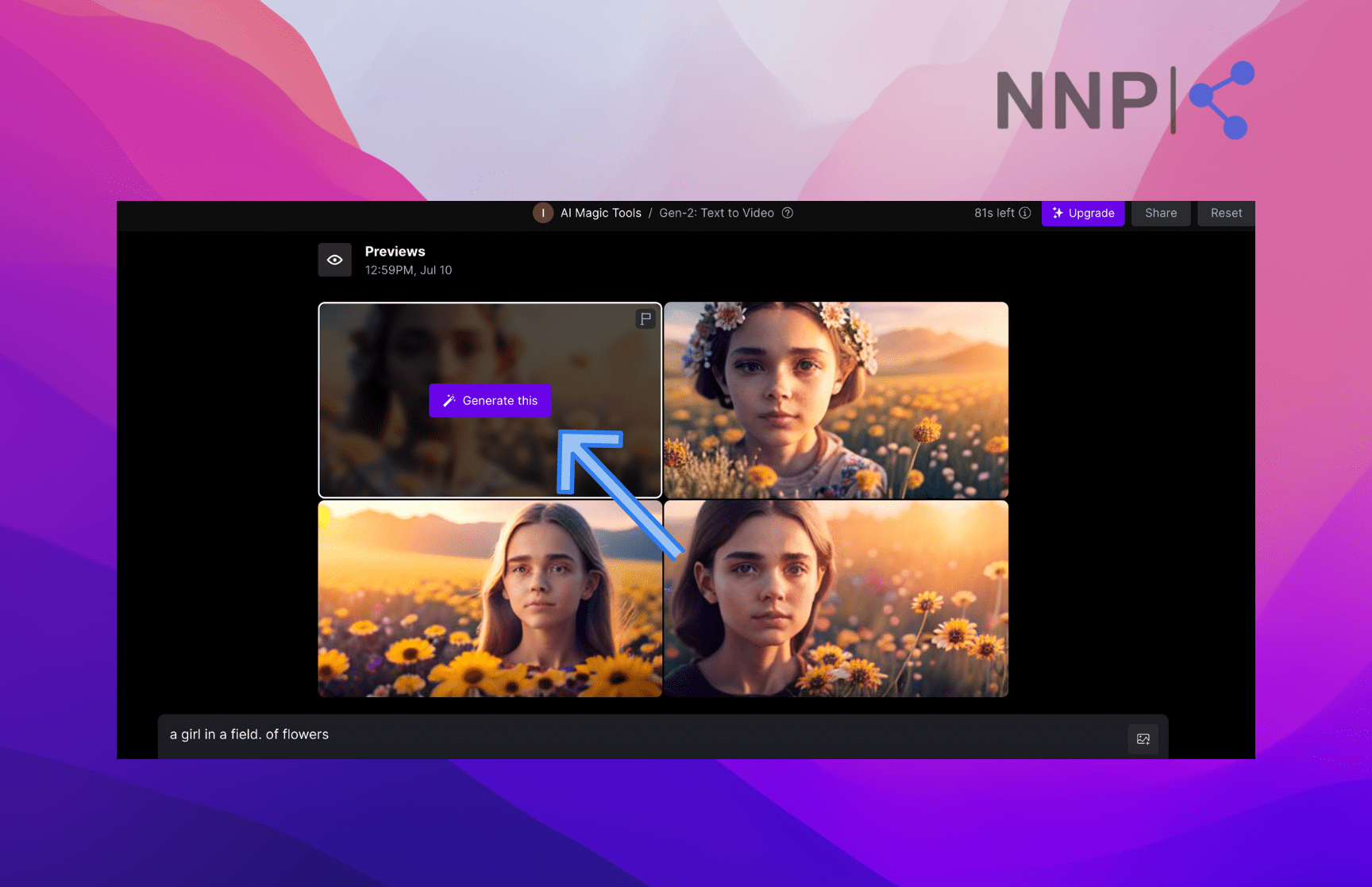

- You’ll get 4 image previews. Hover over your desired preview and click on ‘Generate this’ to generate a video.

- Your video will be generated within a few seconds to a minute.

The video is saved to ‘Assets’ in the left-side panel.
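The advice above to make the prompt as descriptive as possible can be sketched as a small helper that assembles a prompt from separate parts. This is only an illustration, not part of Runway: the function name `build_prompt` and the `style`, `setting`, `lighting`, and `camera` fields are hypothetical, and the resulting string is simply pasted into the Gen-2 text field by hand.

```python
def build_prompt(subject, style=None, setting=None, lighting=None, camera=None):
    """Join the non-empty parts into one comma-separated prompt string.

    Hypothetical helper for drafting prompts; it does not call Runway.
    """
    parts = [subject, style, setting, lighting, camera]
    # Skip parts that are missing or contain only whitespace.
    return ", ".join(p.strip() for p in parts if p and p.strip())


# Example values are made up for illustration.
prompt = build_prompt(
    subject="An animation of a dog reading a newspaper",
    style="hand-drawn cartoon style",
    setting="in a cozy living room",
    lighting="warm morning light",
    camera="slow zoom in",
)
print(prompt)
```

Keeping the parts separate makes it easy to change one modifier at a time and compare the previews, which helps because the model does not always honor every modifier.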

Verdict

For the sake of this article, I tested the Gen-2 model to see it in action. I used the prompt:

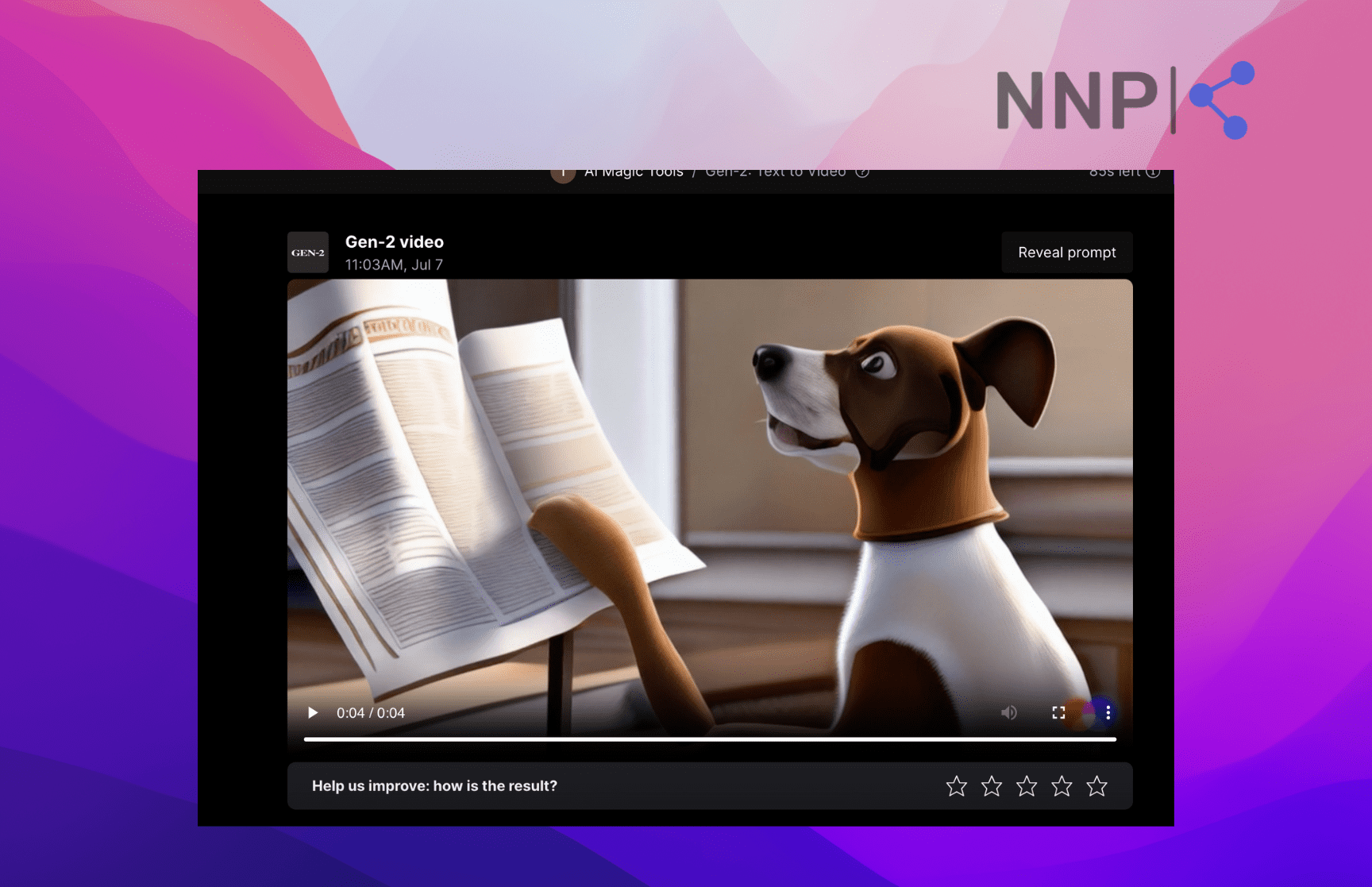

“An animation of a dog reading a newspaper.”

I got an animated video of a dog reading a newspaper. However, as the screenshot shows, there are many imperfections in the dog’s mouth, ears, and head. The newspaper pages are also inconsistent, with one longer than the other.

Despite the imperfections, Runway’s Gen-2 model is an impressive step toward developing text-to-video AI. Although users and experts describe it as more of a novelty tool than a production tool, we are certainly seeing great potential in Gen-2 and what it will be able to do in the future.

Gen-2 limitations

Although Gen-2 is a remarkable development in text-to-video generation, it has a number of limitations.

- Videos are limited to 4 seconds in length.

- The video quality could be better; clips often look fuzzy and grainy.

- The model creates distorted human faces, usually inconsistent with anatomy and physics, producing doll-like, emotionless expressions with pasty skin.

- The model struggles with nuance in prompts: it seems to take some modifiers into account and disregard others at random.

- The model's limited training data also contributes to generating inaccurate videos. For example, if it wasn’t trained on particular footage, like the underwater world, it may not be able to produce a satisfactory video.

- As expected, the Gen-2 model contains bias. Although Gen-2 is more diverse than other AI models, it still produces evidently biased videos. For example, for the prompt ‘nurse,’ the model typically presented a young white woman.


Gen-2 plans and pricing

Runway offers a free plan and two paid tiers, Standard and Pro. The plans apply to all of Runway’s tools, not just Gen-2.

When you first sign in to Runway, you get around 100 free seconds of content generation. The free plan comes with other limits as well: only 3 video projects, no option to buy more credits, upscale resolution, or remove the watermark, limited video editor and image exports, 5GB of asset storage space, and slower video generation.
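To put the free allowance in perspective, here is a rough back-of-the-envelope calculation. It assumes that each second of generated video uses up one second of the allowance and that previews cost nothing; neither assumption is confirmed by Runway, and the 100-second figure is itself approximate.

```python
FREE_SECONDS = 100     # approximate free allowance on sign-up
MAX_CLIP_SECONDS = 4   # maximum Gen-2 clip length listed under limitations


def max_full_length_clips(total_seconds, clip_seconds=MAX_CLIP_SECONDS):
    """Number of full-length clips that fit into the allowance."""
    return total_seconds // clip_seconds


print(max_full_length_clips(FREE_SECONDS))  # 25
```

In other words, under these assumptions the free plan covers roughly 25 full-length clips before you run out of seconds.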

The paid Standard and Pro plans offer a lot more: the option to buy more credits, the option to upscale resolution and remove the watermark, unlimited video projects, up to 5 video editors, far more asset storage space, more export options, and, of course, shorter wait times for video generation.

You can access all plans and benefits by clicking on the ‘Upgrade to Standard’ button at the bottom of the Runway dashboard's left-side panel.

Gen-2 customer reviews and ratings

User feedback on Gen-2 is mixed.

Kyle Wiggers from TechCrunch had a go at Runway Gen-2. He finds the technology innovative but thinks it’s practically limited due to various flaws. The videos it creates are a bit choppy, almost like a slideshow, and the quality feels a bit rough around the edges, like an old-school Instagram filter, which often seems grainy, fuzzy, and surrealistic.

Despite Gen-2’s novelty and commercial availability, Wiggers ultimately sees the Gen-2 model more as a fun gadget or tool for creative experimentation rather than a robust solution for professional video workflows.

Similarly, Sean Captain from Tom’s Guide is intrigued by the possibilities of Runway Gen-2. He found the detail and stylization superior to rival apps, and admired its ambition, despite occasional surreal outcomes. However, he highlighted its inconsistency and warned about the potential cost of generating longer videos in the future, stating that for now, Runway Gen-2 is more of a glimpse into the future of AI in video generation rather than a fully polished product.

Gen-2 has received an average 3-star rating on Futurepedia. Users, in general, find it interesting and fun to play with, but highlight its flaws and limitations.


To wrap up

Runway's Gen-2 Video AI model is the first commercially available text-to-video model that can transform text prompts, images, and videos into personalized video content. It offers various tools for video and image manipulation, setting a new bar in the AI industry. However, the tool is not without its flaws, such as less-than-perfect video quality, distortion of human facial features, and certain biases in video output.

While users find it intriguing and potentially indicative of future AI trends, they emphasize that there's still room for significant improvements before it can serve as a comprehensive solution for professional video production.
